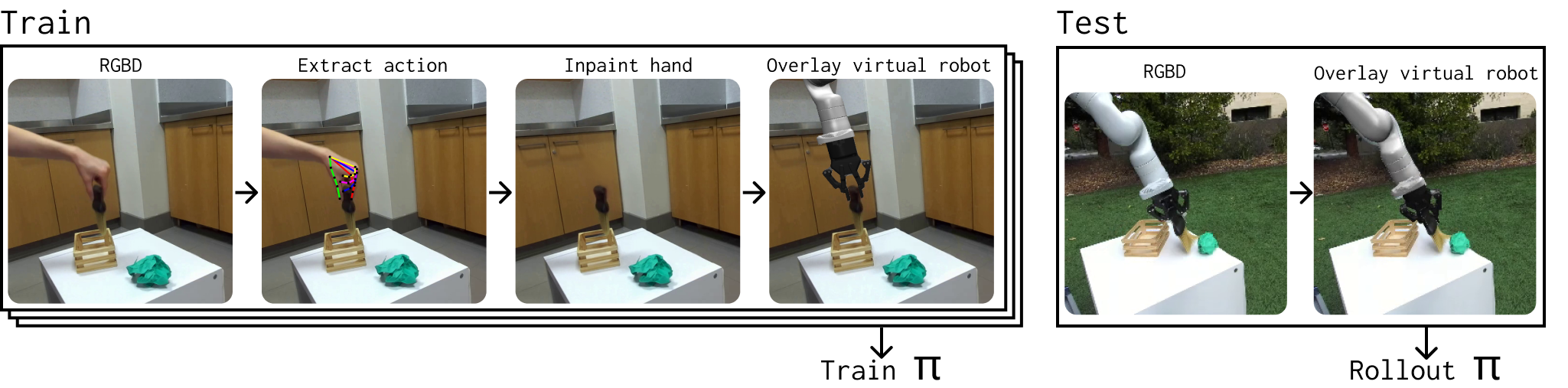

Overview of our data-editing pipeline for learning robot policies from human videos. During training, we first estimate the hand pose in each frame of a human video demonstration and convert it into a robot action. We then remove the human hand using inpainting and overlay a virtual robot in its place. The resulting augmented dataset is used to train an imitation learning policy, π. At test time, we overlay a virtual robot on real robot observations to ensure visual consistency, enabling direct deployment of the learned policy on a real robot.

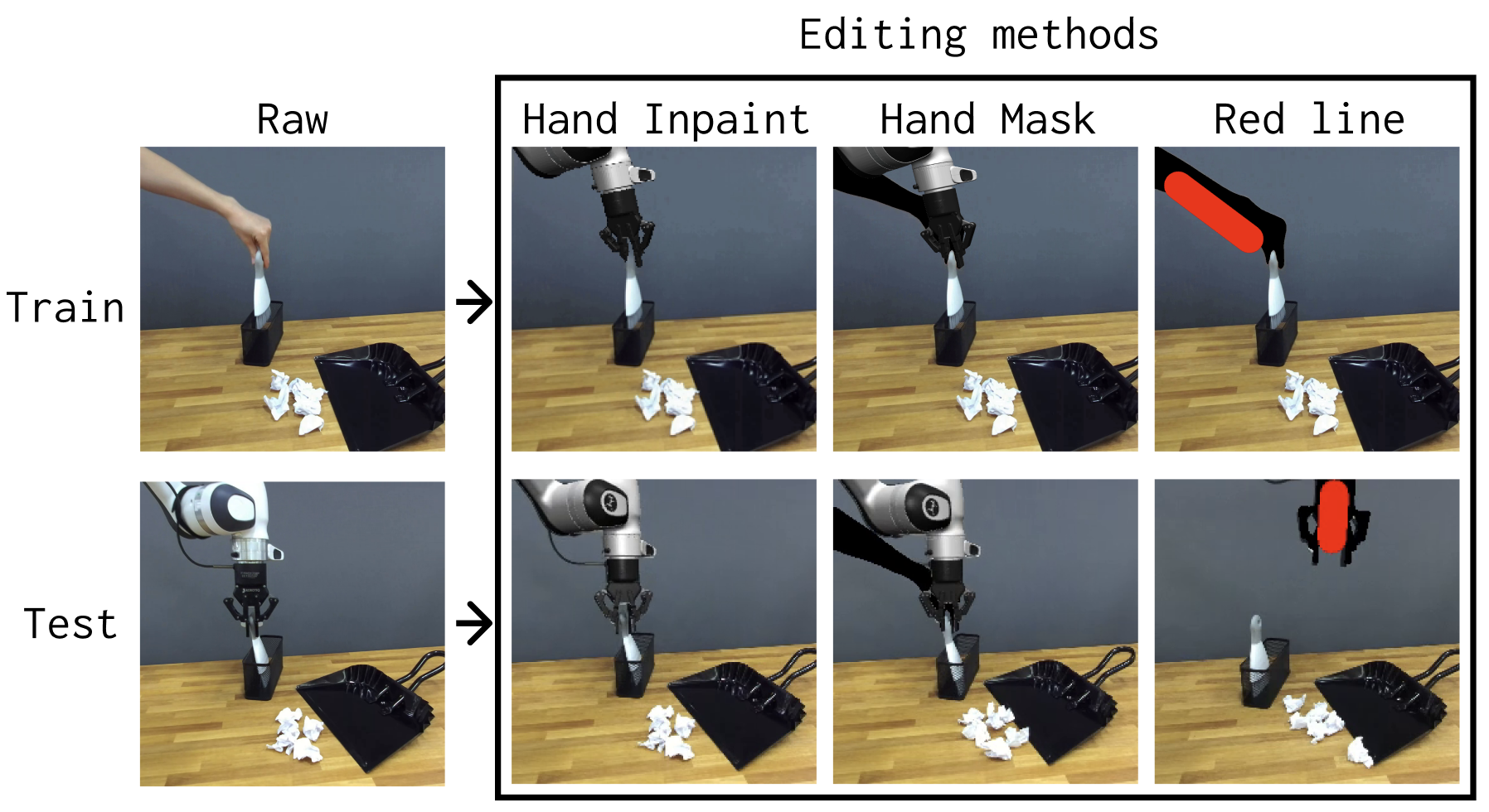

Our method is robot agnostic. As shown below, each human video can be converted into a robot demonstration for any robot capable of completing the task. In our experiments, we deploy our method on two different robots: Franka and Kinova Gen3 (both with robotiq gripper).